Adversarial Attacks: Difference between revisions

From exmediawiki

Revisions by C.heck (Talk | contribs); no edit summary. 13 intermediate revisions by 2 users are not shown.

Line 1: | Line 1:

Blog von Francis Hunger und Flupke mit schönen beispielen...: http://adversarial.io/blog/allgemein/ | |||

https://github.com/shangtse/robust-physical-attack | |||

https://spectrum.ieee.org/cars-that-think/transportation/sensors/slight-street-sign-modifications-can-fool-machine-learning-algorithms | |||

https://github.com/ifding/adversarial-examples/blob/master/notebooks/adversarial.ipynb | |||

https://arxiv.org/pdf/1712.09665.pdf | |||

https://github.com/zentralwerkstatt/adversarial/blob/master/adversarial.ipynb | |||

https://christophm.github.io/interpretable-ml-book/adversarial.html | |||

https://b-ok.cc/book/5260920/fee7e3 < book: Strengthening Deep Neural Networks: Making AI Less Susceptible to Adversarial Trickery | |||

---- | |||

https://towardsdatascience.com/perhaps-the-simplest-introduction-of-adversarial-examples-ever-c0839a759b8d | |||

--- | |||

* A Complete List of All (arXiv) Adversarial Example Papers https://nicholas.carlini.com/writing/2019/all-adversarial-example-papers.html | |||

* Praxis-Beispiele: https://boingboing.net/tag/adversarial-examples | * Praxis-Beispiele: https://boingboing.net/tag/adversarial-examples | ||

* https://bdtechtalks.com/2018/12/27/deep-learning-adversarial-attacks-ai-malware/ | * https://bdtechtalks.com/2018/12/27/deep-learning-adversarial-attacks-ai-malware/ | ||

| Zeile 6: | Zeile 27: | ||

* https://en.wikipedia.org/wiki/Deep_learning#Cyberthreat | * https://en.wikipedia.org/wiki/Deep_learning#Cyberthreat | ||

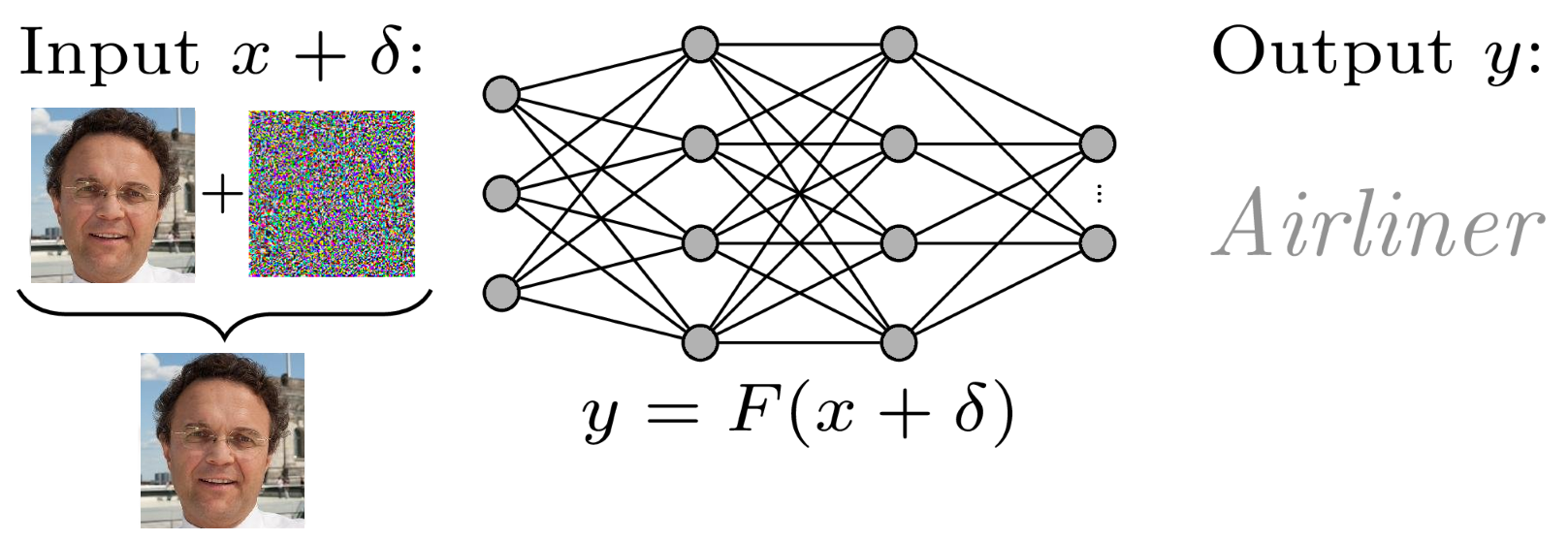

[[File: | [[File:Adv-attack.png|800]] | ||

=notes=

https://github.com/tensorflow/cleverhans

=WHITE BOX ATTACKS=

* https://cv-tricks.com/how-to/breaking-deep-learning-with-adversarial-examples-using-tensorflow/

Line 18: | Line 42:

===Fast Gradient Sign Method(FGSM)===

FGSM is a single-step attack, i.e. the perturbation is added in one step rather than accumulated over a loop (as in an iterative attack).

* https://www.tensorflow.org/beta/tutorials/generative/adversarial_fgsm

* https://arxiv.org/pdf/1412.6572.pdf

** https://github.com/soumyac1999/FGSM-Keras

===Basic Iterative Method===

Line 39: | Line 66:

** Jupyter Notebook: https://github.com/oscarknagg/adversarial/blob/master/notebooks/Creating_And_Defending_From_Adversarial_Examples.ipynb

=WHITE/BLACK BOX ATTACKS=

==on voice (ASR)==

Line 70: | Line 95:

* https://boingboing.net/2019/03/31/mote-in-cars-eye.html

** Paper by the research team: https://keenlab.tencent.com/en/whitepapers/Experimental_Security_Research_of_Tesla_Autopilot.pdf

===Another Attack Against Driverless Cars===

<u>Schneier News:</u>

In this piece of research, attackers [https://arxiv.org/pdf/1906.09765.pdf successfully attack] a driverless car system -- Renault Captur's "Level 0" autopilot (Level 0 systems advise human drivers but do not directly operate cars) -- by following them with drones that project images of fake road signs in 100ms bursts. The time is too short for human perception, but long enough to fool the autopilot's sensors.

Boing Boing [https://boingboing.net/2019/07/06/flickering-car-ghosts.html post].

----

==on voice (ASR)==

* https://www.the-ambient.com/features/weird-ways-echo-can-be-hacked-how-to-stop-it-231

Line 99: | Line 133:

==Anti Surveillance==

http://dismagazine.com/dystopia/evolved-lifestyles/8115/anti-surveillance-how-to-hide-from-machines/

===How to Disappear Completely===

https://www.youtube.com/watch?v=LOulCAz4S0M talk by Lilly Ryan at linux.conf.au 2019, Christchurch, New Zealand

----

Line 104: | Line 141:

* https://github.com/bethgelab

* https://github.com/tensorflow/cleverhans

----

=Detection through [https://exmediawiki.khm.de/exmediawiki/index.php?title=XAI_/_Language_Models

I XAI]=

When [https://exmediawiki.khm.de/exmediawiki/index.php?title=XAI_/_Language_Models

I Explainability] Meets Adversarial Learning: Detecting Adversarial Examples using SHAP Signatures: https://arxiv.org/pdf/1909.03418.pdf

Using [https://exmediawiki.khm.de/exmediawiki/index.php?title=XAI_/_Language_Models

I Explainabilty] to Detect Adversarial Attacks: https://openreview.net/pdf?id=B1xu6yStPH

[[Category:Hacking]]

[[Category:KI]]

[[Category: deep learning]]

[[Category:Programmierung]]

[[Category:Python]]

[[Category:Tensorflow]]

[[Category:Keras]]

[[Category:Adversarial Attack]]

Current revision as of 17 December 2020, 16:43

Blog by Francis Hunger and Flupke with nice examples: http://adversarial.io/blog/allgemein/

https://github.com/shangtse/robust-physical-attack

https://github.com/ifding/adversarial-examples/blob/master/notebooks/adversarial.ipynb

https://arxiv.org/pdf/1712.09665.pdf

https://github.com/zentralwerkstatt/adversarial/blob/master/adversarial.ipynb

https://christophm.github.io/interpretable-ml-book/adversarial.html

https://b-ok.cc/book/5260920/fee7e3 < book: Strengthening Deep Neural Networks: Making AI Less Susceptible to Adversarial Trickery

- A Complete List of All (arXiv) Adversarial Example Papers https://nicholas.carlini.com/writing/2019/all-adversarial-example-papers.html

- Practical examples: https://boingboing.net/tag/adversarial-examples

- https://bdtechtalks.com/2018/12/27/deep-learning-adversarial-attacks-ai-malware/

- https://www.dailydot.com/debug/ai-malware/

notes

https://github.com/tensorflow/cleverhans

WHITE BOX ATTACKS

- https://cv-tricks.com/how-to/breaking-deep-learning-with-adversarial-examples-using-tensorflow/

- Paper »ADVERSARIAL EXAMPLES IN THE PHYSICAL WORLD«: https://arxiv.org/pdf/1607.02533.pdf

Untargeted Adversarial Attacks

Adversarial attacks that only aim to confuse the model into predicting any wrong class are called untargeted adversarial attacks.

- not targeted (no specific wrong class is aimed for)

Fast Gradient Sign Method(FGSM)

FGSM is a single-step attack, i.e. the perturbation is added in one step rather than accumulated over a loop (as in an iterative attack).

- https://www.tensorflow.org/beta/tutorials/generative/adversarial_fgsm

- https://arxiv.org/pdf/1412.6572.pdf
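The single step can be sketched in a few lines of plain Python. This is a minimal illustration, not code from the linked tutorial or paper. It assumes a toy logistic-regression classifier so the input gradient can be written by hand. The weights, the input and the value of eps are made up.

```python
import math

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def predict_proba(w, b, x):
    """P(y = 1 | x) for a toy logistic-regression classifier."""
    return sigmoid(sum(wi * xi for wi, xi in zip(w, x)) + b)

def sign(v):
    return (v > 0) - (v < 0)

def fgsm(w, b, x, y, eps):
    """Single step: x_adv = x + eps * sign(d loss / d x)."""
    p = predict_proba(w, b, x)
    # For cross-entropy loss on a logistic model, d loss / d x = (p - y) * w.
    grad = [(p - y) * wi for wi in w]
    return [xi + eps * sign(g) for xi, g in zip(x, grad)]

# Hypothetical weights and input, chosen only for illustration.
w, b = [2.0, -1.0, 0.5], 0.0
x, y = [0.5, 0.2, 0.1], 1

x_adv = fgsm(w, b, x, y, eps=0.4)
print(predict_proba(w, b, x) > 0.5)      # clean input is classified as class 1
print(predict_proba(w, b, x_adv) > 0.5)  # perturbed input is not
```

Every coordinate moves by exactly eps, so the perturbation is bounded in the maximum norm. With a deep network the gradient would come from automatic differentiation instead of the closed form used here.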

Basic Iterative Method

Apply the perturbation in several small steps instead of in a single step.
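A minimal sketch of the iterative variant, again on a made-up logistic-regression model. The step size alpha, the budget eps and the number of steps are illustrative assumptions. After every small step the result is clipped so that it stays within eps of the original input.

```python
import math

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def predict_proba(w, b, x):
    return sigmoid(sum(wi * xi for wi, xi in zip(w, x)) + b)

def sign(v):
    return (v > 0) - (v < 0)

def clip(v, lo, hi):
    return max(lo, min(hi, v))

def basic_iterative_method(w, b, x, y, eps, alpha, steps):
    """Repeat small sign-gradient steps, clipping to the eps-box around x."""
    x_adv = list(x)
    for _ in range(steps):
        p = predict_proba(w, b, x_adv)
        grad = [(p - y) * wi for wi in w]  # d loss / d x for logistic regression
        x_adv = [clip(xa + alpha * sign(g), xi - eps, xi + eps)
                 for xa, g, xi in zip(x_adv, grad, x)]
    return x_adv

# Hypothetical model and input.
w, b = [2.0, -1.0, 0.5], 0.0
x, y = [0.5, 0.2, 0.1], 1

# Five steps of 0.1 would move 0.5 in total; the clip keeps the change at 0.3.
x_adv = basic_iterative_method(w, b, x, y, eps=0.3, alpha=0.1, steps=5)
print(predict_proba(w, b, x), predict_proba(w, b, x_adv))
```

On this linear toy model the gradient direction never changes, so the iterations end where one large step would. The benefit of re-computing the gradient shows up on non-linear models.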

Iterative Least-Likely Class Method

Craft an image that is pushed toward the class with the lowest score in the model's prediction for the original input.
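A minimal sketch of the idea, assuming a tiny linear softmax classifier with three classes. The weight matrix W, the input and all step parameters are hypothetical. The least-likely class is read off the prediction for the clean input once, and each step then lowers the loss toward that class.

```python
import math

def softmax(z):
    m = max(z)
    e = [math.exp(v - m) for v in z]
    s = sum(e)
    return [v / s for v in e]

def predict(W, x):
    """Class probabilities of a linear softmax classifier (one row of W per class)."""
    return softmax([sum(wi * xi for wi, xi in zip(row, x)) for row in W])

def grad_loss_wrt_input(W, x, target):
    """Gradient of cross-entropy(predict(W, x), target) with respect to x."""
    p = predict(W, x)
    return [sum((p[k] - (1.0 if k == target else 0.0)) * W[k][j]
                for k in range(len(W)))
            for j in range(len(x))]

def sign(v):
    return (v > 0) - (v < 0)

def least_likely_class_attack(W, x, eps, alpha, steps):
    probs = predict(W, x)
    y_ll = probs.index(min(probs))  # least-likely class of the clean input
    x_adv = list(x)
    for _ in range(steps):
        g = grad_loss_wrt_input(W, x_adv, y_ll)
        # Step against the gradient to lower the loss toward y_ll,
        # then clip to the eps-box around the original input.
        x_adv = [max(xi - eps, min(xi + eps, xa - alpha * sign(gj)))
                 for xa, gj, xi in zip(x_adv, g, x)]
    return x_adv, y_ll

# Hypothetical 3-class model on 2-dimensional inputs.
W = [[1.0, 0.0], [0.0, 1.0], [-1.0, -1.0]]
x = [1.0, 0.2]

x_adv, y_ll = least_likely_class_attack(W, x, eps=1.0, alpha=0.25, steps=6)
probs_adv = predict(W, x_adv)
print(y_ll, probs_adv.index(max(probs_adv)))
```

The only difference from the basic iterative method is the sign of the step and the label used in the loss.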

Targeted Adversarial Attacks

Attacks that force the model to predict a specific (wrong) output chosen by the attacker are called targeted adversarial attacks.

- targeted (the attacker picks the wrong class)

(Un-)Targeted Adversarial Attacks

Can do both (targeted and untargeted).

Projected Gradient Descent (PGD)

Find a perturbation that maximizes the model's loss on a given input.
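Written out, the goal is to maximize loss(f(x + delta), y) over all perturbations delta with max-norm at most eps. The sketch below is one common way to approximate this, not a reference implementation. It assumes a toy logistic-regression model, a max-norm budget, a random starting point inside the budget, and made-up values for eps, alpha and the step count.

```python
import math
import random

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def predict_proba(w, b, x):
    return sigmoid(sum(wi * xi for wi, xi in zip(w, x)) + b)

def cross_entropy(w, b, x, y):
    p = predict_proba(w, b, x)
    return -math.log(p if y == 1 else 1.0 - p)

def sign(v):
    return (v > 0) - (v < 0)

def pgd(w, b, x, y, eps, alpha, steps, rng):
    """Gradient ascent on the loss with projection back onto the eps-box."""
    # Random start inside the allowed region (an assumption of this sketch).
    x_adv = [xi + rng.uniform(-eps, eps) for xi in x]
    for _ in range(steps):
        p = predict_proba(w, b, x_adv)
        grad = [(p - y) * wi for wi in w]  # d loss / d x for logistic regression
        stepped = [xa + alpha * sign(g) for xa, g in zip(x_adv, grad)]
        # Projection: for the max-norm this is a per-coordinate clip.
        x_adv = [min(max(s, xi - eps), xi + eps) for s, xi in zip(stepped, x)]
    return x_adv

# Hypothetical model and input.
w, b = [2.0, -1.0, 0.5], 0.0
x, y = [0.5, 0.2, 0.1], 1

x_adv = pgd(w, b, x, y, eps=0.3, alpha=0.1, steps=10, rng=random.Random(0))
print(cross_entropy(w, b, x, y), cross_entropy(w, b, x_adv, y))
```

To use it as a targeted attack, the same loop can descend the loss toward a chosen target label instead of ascending the loss of the true label.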

WHITE/BLACK BOX ATTACKS

on voice (ASR)

Psychoacoustic Hiding (Attacking Speech Recognition)

BLACK BOX ATTACKS

- https://medium.com/@ml.at.berkeley/tricking-neural-networks-create-your-own-adversarial-examples-a61eb7620fd8

- Jupyter Notebook: https://github.com/dangeng/Simple_Adversarial_Examples

on computer vision

Zeroth order optimization (ZOO)

- attacks that directly estimate the gradients of the targeted DNN, using only its inputs and outputs

Black-Box Attacks using Adversarial Samples

- a technique that uses the victim model as an oracle to label a synthetic training set for the substitute, so the attacker need not even collect a training set to mount the attack
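The oracle idea can be sketched end to end on a toy problem. Everything here is an assumption for illustration: the victim is a hidden linear rule, the synthetic set is a fixed grid, and the substitute is a small logistic regression. The published technique also grows the synthetic set iteratively, which this sketch leaves out.

```python
import math

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def oracle(x):
    """Victim model: returns only a label; its rule is hidden from the attacker."""
    return 1 if 2.0 * x[0] - x[1] > 0 else 0

# 1. Synthetic inputs (a fixed grid) labeled by querying the oracle.
grid = [(i - 5) / 5 for i in range(11)]
inputs = [[a, c] for a in grid for c in grid]
labels = [oracle(x) for x in inputs]

# 2. Train a logistic-regression substitute on the oracle's labels.
def train_substitute(inputs, labels, lr=0.5, epochs=300):
    w, b = [0.0, 0.0], 0.0
    n = len(inputs)
    for _ in range(epochs):
        gw, gb = [0.0, 0.0], 0.0
        for x, y in zip(inputs, labels):
            err = sigmoid(w[0] * x[0] + w[1] * x[1] + b) - y
            gw[0] += err * x[0]
            gw[1] += err * x[1]
            gb += err
        w = [wi - lr * gi / n for wi, gi in zip(w, gw)]
        b -= lr * gb / n
    return w, b

w_sub, b_sub = train_substitute(inputs, labels)

# 3. Craft the adversarial example with FGSM on the substitute only.
def fgsm_on_substitute(x, y, eps):
    p = sigmoid(w_sub[0] * x[0] + w_sub[1] * x[1] + b_sub)
    grad = [(p - y) * wi for wi in w_sub]
    return [xi + eps * ((g > 0) - (g < 0)) for xi, g in zip(x, grad)]

x = [0.3, 0.1]
x_adv = fgsm_on_substitute(x, oracle(x), eps=0.3)

# 4. Transfer: check the crafted input against the victim.
print(oracle(x), oracle(x_adv))
```

The attack never touches the victim's parameters. It relies on the adversarial example transferring from the substitute to the victim.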

new Tesla Hack

- https://spectrum.ieee.org/cars-that-think/transportation/self-driving/three-small-stickers-on-road-can-steer-tesla-autopilot-into-oncoming-lane

- https://boingboing.net/2019/03/31/mote-in-cars-eye.html

- Paper by the research team: https://keenlab.tencent.com/en/whitepapers/Experimental_Security_Research_of_Tesla_Autopilot.pdf

Another Attack Against Driverless Cars

Schneier News:

In this piece of research, attackers successfully attack a driverless car system -- Renault Captur's "Level 0" autopilot (Level 0 systems advise human drivers but do not directly operate cars) -- by following them with drones that project images of fake road signs in 100ms bursts. The time is too short for human perception, but long enough to fool the autopilot's sensors.

Boing Boing post.

on voice (ASR)

- https://www.theregister.co.uk/2016/07/11/siri_hacking_phones/

- https://www.fastcompany.com/90240975/alexa-can-be-hacked-by-chirping-birds

BLACK BOX / WHITE BOX ATTACKS

on voice (ASR)

Psychoacoustic Hiding (Attacking Speech Recognition)

on written text (NLP)

paraphrasing attacks

- https://venturebeat.com/2019/04/01/text-based-ai-models-are-vulnerable-to-paraphrasing-attacks-researchers-find/

- https://bdtechtalks.com/2019/04/02/ai-nlp-paraphrasing-adversarial-attacks/

Anti Surveillance

http://dismagazine.com/dystopia/evolved-lifestyles/8115/anti-surveillance-how-to-hide-from-machines/

How to Disappear Completely

https://www.youtube.com/watch?v=LOulCAz4S0M talk by Lilly Ryan at linux.conf.au 2019 — Christchurch, New Zealand

libraries

Detection through XAI

When Explainability Meets Adversarial Learning: Detecting Adversarial Examples using SHAP Signatures: https://arxiv.org/pdf/1909.03418.pdf

Using Explainabilty to Detect Adversarial Attacks: https://openreview.net/pdf?id=B1xu6yStPH