Adversarial Attacks

- Practical examples: https://boingboing.net/tag/adversarial-examples

- https://bdtechtalks.com/2018/12/27/deep-learning-adversarial-attacks-ai-malware/

- https://www.dailydot.com/debug/ai-malware/

WHITE BOX ATTACKS

- https://cv-tricks.com/how-to/breaking-deep-learning-with-adversarial-examples-using-tensorflow/

- Paper »ADVERSARIAL EXAMPLES IN THE PHYSICAL WORLD«: https://arxiv.org/pdf/1607.02533.pdf

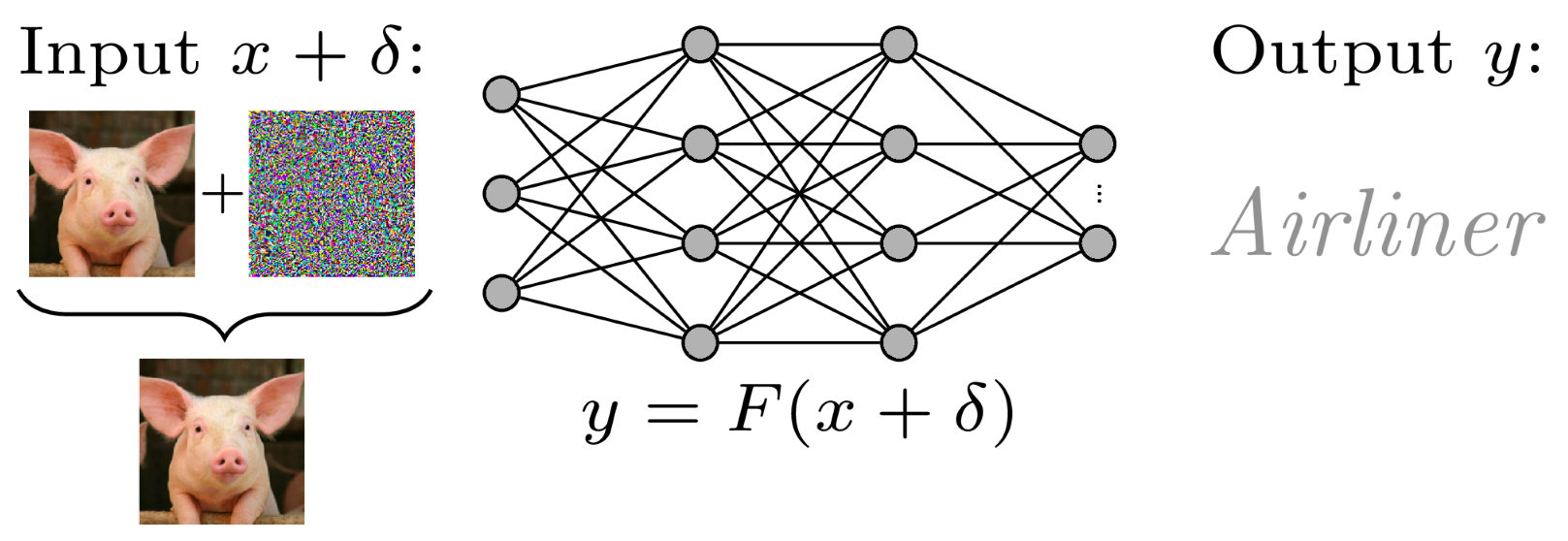

Untargeted Adversarial Attacks

Adversarial attacks whose only goal is to confuse the model into predicting any wrong class are called Untargeted Adversarial Attacks.

- not aimed at a specific class

Fast Gradient Sign Method (FGSM)

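FGSM is a single-step attack, i.e., the perturbation is added in one step instead of over a loop (as in an iterative attack): every input value is shifted by ε in the direction of the sign of the loss gradient with respect to that input.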
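A minimal sketch of the FGSM step, x_adv = clip(x + ε · sign(∇x J(x, y))), is shown below. It assumes a hypothetical toy linear softmax classifier with two input features in [0, 1] standing in for image pixels; the weights `W`, `b`, the input and ε are made up for illustration, and the gradient uses the closed form of this toy model, whereas a real attack would obtain it from an autodiff framework.

```python
import math

# Hypothetical toy model: linear softmax classifier, 3 classes, 2 input features in [0, 1].
W = [[2.0, -1.0], [-1.0, 2.0], [0.5, 0.5]]
b = [0.0, 0.0, 0.0]


def predict_proba(x):
    logits = [sum(w_j * x_j for w_j, x_j in zip(row, x)) + b_k for row, b_k in zip(W, b)]
    m = max(logits)  # subtract the max for numerical stability
    exps = [math.exp(z - m) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]


def predict(x):
    p = predict_proba(x)
    return max(range(len(p)), key=lambda k: p[k])


def loss(x, y):
    # Cross-entropy loss J(x, y) = -log p_y(x)
    return -math.log(predict_proba(x)[y])


def input_gradient(x, y):
    # Closed form for a linear softmax model: dJ/dx = W^T (p - onehot(y))
    p = predict_proba(x)
    delta = [p_k - (1.0 if k == y else 0.0) for k, p_k in enumerate(p)]
    return [sum(W[k][j] * delta[k] for k in range(len(W))) for j in range(len(x))]


def sign(v):
    return (v > 0) - (v < 0)


def fgsm(x, y, eps):
    # One single step of size eps along the gradient sign, then clip to the valid range [0, 1]
    g = input_gradient(x, y)
    return [min(1.0, max(0.0, x_j + eps * sign(g_j))) for x_j, g_j in zip(x, g)]


x, y = [0.6, 0.4], 0
x_adv = fgsm(x, y, eps=0.2)
print(predict(x), predict(x_adv))  # 0 1
```

With ε = 0.2 each feature moves by exactly 0.2, which is enough to flip this toy model's prediction from class 0 to class 1.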

Basic Iterative Method

Instead of applying the perturbation in a single step, apply it in several smaller steps, clipping the result after each step so that it stays within ε of the original image.
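The iterative variant can be sketched as repeated small FGSM steps of size α, each followed by clipping to the ε-neighbourhood of the original input and to the valid range. The toy linear softmax model, the input and the values of `eps`, `alpha` and `steps` below are hypothetical choices for illustration, not the settings from »Adversarial Examples in the Physical World«.

```python
import math

# Hypothetical toy model: linear softmax classifier, 3 classes, 2 features in [0, 1].
W = [[2.0, -1.0], [-1.0, 2.0], [0.5, 0.5]]


def predict_proba(x):
    logits = [row[0] * x[0] + row[1] * x[1] for row in W]
    m = max(logits)
    exps = [math.exp(z - m) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]


def predict(x):
    p = predict_proba(x)
    return max(range(len(p)), key=lambda k: p[k])


def input_gradient(x, y):
    # Gradient of the cross-entropy loss J(x, y) w.r.t. x: W^T (p - onehot(y))
    p = predict_proba(x)
    delta = [p_k - (1.0 if k == y else 0.0) for k, p_k in enumerate(p)]
    return [sum(W[k][j] * delta[k] for k in range(len(W))) for j in range(2)]


def sign(v):
    return (v > 0) - (v < 0)


def clip(v, lo, hi):
    return min(hi, max(lo, v))


def bim(x, y, eps, alpha, steps):
    x_adv = list(x)
    for _ in range(steps):
        g = input_gradient(x_adv, y)
        # Small step of size alpha, then clip to the eps-neighbourhood of x and to [0, 1]
        x_adv = [
            clip(a + alpha * sign(g_j), max(0.0, x_j - eps), min(1.0, x_j + eps))
            for a, x_j, g_j in zip(x_adv, x, g)
        ]
    return x_adv


x, y = [0.6, 0.4], 0
x_adv = bim(x, y, eps=0.2, alpha=0.05, steps=10)
print(predict(x), predict(x_adv))  # 0 1
```

On a linear toy model the iterations simply walk to the corner of the ε-box; on a deep network the gradient direction changes between steps, which is why the iterative method usually finds stronger perturbations than a single FGSM step.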

Iterative Least-Likely Class Method

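Create an image that the model assigns to the class it originally rated as least likely (the class with the lowest predicted score), by iteratively stepping in the direction that lowers the loss for that class.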
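A minimal sketch of the least-likely-class idea: pick the class with the lowest predicted probability, then take clipped steps *against* the gradient of its loss so that this class becomes the prediction. The toy model and all parameter values are hypothetical and only serve to make the sketch runnable.

```python
import math

# Hypothetical toy model: linear softmax classifier, 3 classes, 2 features in [0, 1].
W = [[2.0, -1.0], [-1.0, 2.0], [0.5, 0.5]]


def predict_proba(x):
    logits = [row[0] * x[0] + row[1] * x[1] for row in W]
    m = max(logits)
    exps = [math.exp(z - m) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]


def predict(x):
    p = predict_proba(x)
    return max(range(len(p)), key=lambda k: p[k])


def input_gradient(x, y):
    # Gradient of the cross-entropy loss J(x, y) w.r.t. x: W^T (p - onehot(y))
    p = predict_proba(x)
    delta = [p_k - (1.0 if k == y else 0.0) for k, p_k in enumerate(p)]
    return [sum(W[k][j] * delta[k] for k in range(len(W))) for j in range(2)]


def sign(v):
    return (v > 0) - (v < 0)


def clip(v, lo, hi):
    return min(hi, max(lo, v))


def illc(x, eps, alpha, steps):
    p = predict_proba(x)
    y_ll = min(range(len(p)), key=lambda k: p[k])  # class with the lowest predicted score
    x_adv = list(x)
    for _ in range(steps):
        g = input_gradient(x_adv, y_ll)
        # Step against the gradient to decrease the loss for y_ll, then clip as in BIM
        x_adv = [
            clip(a - alpha * sign(g_j), max(0.0, x_j - eps), min(1.0, x_j + eps))
            for a, x_j, g_j in zip(x_adv, x, g)
        ]
    return x_adv, y_ll


x = [0.6, 0.4]
x_adv, y_ll = illc(x, eps=0.2, alpha=0.05, steps=10)
print(predict(x), y_ll, predict(x_adv))  # 0 1 1
```

The only differences from the basic iterative method are the choice of label (least-likely class instead of the true class) and the sign of the step.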

Targeted Adversarial Attacks

Attacks that compel the model to predict a specific (wrong) output chosen by the attacker are called Targeted Adversarial Attacks.

- aimed at a specific class

(Un-)Targeted Adversarial Attacks

Methods that can be used for both untargeted and targeted attacks.

Projected Gradient Descent (PGD)

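Find a perturbation that maximizes a model's loss for a given input while keeping it within a small ε-ball around that input; after every gradient step the result is projected back into the ball. For a targeted attack, the loss of the chosen target class is minimized instead.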
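A minimal L∞ PGD sketch, x_{t+1} = Proj(x_t ± α · sign(∇x J(x_t, y))), with a random start inside the ε-ball; for the L∞ ball the projection is just coordinate-wise clipping. The toy model, the `targeted` flag and all parameter values are hypothetical illustration choices.

```python
import math
import random

# Hypothetical toy model: linear softmax classifier, 3 classes, 2 features in [0, 1].
W = [[2.0, -1.0], [-1.0, 2.0], [0.5, 0.5]]


def predict_proba(x):
    logits = [row[0] * x[0] + row[1] * x[1] for row in W]
    m = max(logits)
    exps = [math.exp(z - m) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]


def predict(x):
    p = predict_proba(x)
    return max(range(len(p)), key=lambda k: p[k])


def input_gradient(x, y):
    # Gradient of the cross-entropy loss J(x, y) w.r.t. x: W^T (p - onehot(y))
    p = predict_proba(x)
    delta = [p_k - (1.0 if k == y else 0.0) for k, p_k in enumerate(p)]
    return [sum(W[k][j] * delta[k] for k in range(len(W))) for j in range(2)]


def sign(v):
    return (v > 0) - (v < 0)


def clip(v, lo, hi):
    return min(hi, max(lo, v))


def pgd(x, y, eps, alpha, steps, targeted=False, seed=0):
    rng = random.Random(seed)
    # Random start inside the eps-ball around x (and inside [0, 1])
    x_adv = [clip(x_j + rng.uniform(-eps, eps), 0.0, 1.0) for x_j in x]
    # Untargeted: ascend the loss of the true label y.
    # Targeted: y is the target class and its loss is descended instead.
    direction = -1.0 if targeted else 1.0
    for _ in range(steps):
        g = input_gradient(x_adv, y)
        # Gradient-sign step, then projection onto the L-infinity eps-ball (and [0, 1])
        x_adv = [
            clip(a + direction * alpha * sign(g_j), max(0.0, x_j - eps), min(1.0, x_j + eps))
            for a, x_j, g_j in zip(x_adv, x, g)
        ]
    return x_adv


x = [0.6, 0.4]
x_untargeted = pgd(x, y=0, eps=0.2, alpha=0.05, steps=20)
x_targeted = pgd(x, y=1, eps=0.2, alpha=0.05, steps=20, targeted=True)
print(predict(x), predict(x_untargeted), predict(x_targeted))  # 0 1 1
```

The random start is what mainly distinguishes this from the basic iterative method; restarting from several random points and keeping the strongest result is a common extension.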

WHITE/BLACK BOX ATTACKS

on voice (ASR)

Psychoacoustic Hiding (Attacking Speech Recognition)

BLACK BOX ATTACKS

- https://medium.com/@ml.at.berkeley/tricking-neural-networks-create-your-own-adversarial-examples-a61eb7620fd8

- Jupyter Notebook: https://github.com/dangeng/Simple_Adversarial_Examples

on computer vision

Zeroth Order Optimization (ZOO)

- attacks that estimate the gradients of the targeted DNN directly from its output scores (using finite differences), without access to the model's internals
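The core idea can be sketched with symmetric difference quotients: the attacker only calls a hypothetical `query` function that returns class probabilities, and estimates ∂J/∂x_i ≈ (J(x + h·e_i) − J(x − h·e_i)) / 2h, two queries per coordinate. This is a simplified illustration, not the published ZOO algorithm, which uses a different loss on the log scores and coordinate-wise ADAM or Newton updates on randomly chosen coordinates; here the estimate simply drives BIM-style sign steps. The hidden model and all parameters are assumptions.

```python
import math


def query(x):
    # Hypothetical black-box victim: returns class probabilities only; W is hidden from the attacker
    W = [[2.0, -1.0], [-1.0, 2.0], [0.5, 0.5]]
    logits = [row[0] * x[0] + row[1] * x[1] for row in W]
    m = max(logits)
    exps = [math.exp(z - m) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]


def predicted_class(x):
    p = query(x)
    return max(range(len(p)), key=lambda k: p[k])


def attack_loss(x, y):
    # Loss computed from queried scores only: -log p_y(x)
    return -math.log(query(x)[y])


def estimate_gradient(x, y, h=1e-4):
    # Symmetric finite differences, one coordinate at a time (2 queries per coordinate)
    grad = []
    for i in range(len(x)):
        x_plus, x_minus = list(x), list(x)
        x_plus[i] += h
        x_minus[i] -= h
        grad.append((attack_loss(x_plus, y) - attack_loss(x_minus, y)) / (2 * h))
    return grad


def clip(v, lo, hi):
    return min(hi, max(lo, v))


def zoo_style_attack(x, y, eps=0.2, alpha=0.05, steps=10):
    x_adv = list(x)
    for _ in range(steps):
        g = estimate_gradient(x_adv, y)
        # Sign step on the estimated gradient, clipped to the eps-ball and to [0, 1]
        x_adv = [
            clip(a + alpha * ((g_j > 0) - (g_j < 0)), max(0.0, x_j - eps), min(1.0, x_j + eps))
            for a, x_j, g_j in zip(x_adv, x, g)
        ]
    return x_adv


x, y = [0.6, 0.4], 0
g_est = estimate_gradient(x, y)
x_adv = zoo_style_attack(x, y)
print(predicted_class(x), predicted_class(x_adv))  # 0 1
```

The query cost grows with the input dimension (two queries per coordinate per step), which is why the real attack estimates only a few randomly chosen coordinates per iteration.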

Black-Box Attacks using Adversarial Samples

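- a technique that trains a local substitute model on a synthetic training set labeled by querying the victim model as an oracle; adversarial examples crafted against the substitute often transfer to the victim, so the attacker need not even collect a training set to mount the attack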
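A simplified sketch of the substitute-model approach: a hypothetical `oracle` returns only labels, random synthetic inputs are labeled by it, a logistic-regression substitute is trained on those labels, and FGSM is run on the substitute. The published method chooses synthetic points with Jacobian-based dataset augmentation rather than uniform sampling; that part, the hidden oracle parameters and all hyperparameters here are assumptions made for brevity.

```python
import math
import random


def oracle(x):
    # Hypothetical victim: the attacker can only query it for a label; its parameters are hidden
    hidden_w, hidden_b = [-1.2, 1.5], -0.15
    return 1 if hidden_w[0] * x[0] + hidden_w[1] * x[1] + hidden_b > 0 else 0


def sigmoid(t):
    return 1.0 / (1.0 + math.exp(-t))


def train_substitute(xs, ys, lr=1.0, epochs=1000):
    # Binary logistic regression fitted by full-batch gradient descent on the oracle's labels
    w, b = [0.0, 0.0], 0.0
    n = len(xs)
    for _ in range(epochs):
        gw, gb = [0.0, 0.0], 0.0
        for x, y in zip(xs, ys):
            err = sigmoid(w[0] * x[0] + w[1] * x[1] + b) - y
            gw[0] += err * x[0]
            gw[1] += err * x[1]
            gb += err
        w = [w[0] - lr * gw[0] / n, w[1] - lr * gw[1] / n]
        b -= lr * gb / n
    return w, b


def fgsm_on_substitute(x, y, w, b, eps):
    # Gradient of the substitute's log loss w.r.t. the input: (sigmoid(w.x + b) - y) * w
    err = sigmoid(w[0] * x[0] + w[1] * x[1] + b) - y
    g = [err * w[0], err * w[1]]
    return [min(1.0, max(0.0, x_j + eps * ((g_j > 0) - (g_j < 0)))) for x_j, g_j in zip(x, g)]


rng = random.Random(0)
synthetic = [[rng.random(), rng.random()] for _ in range(200)]  # no real training data needed
labels = [oracle(x) for x in synthetic]  # the victim acts as the labeling oracle
w_sub, b_sub = train_substitute(synthetic, labels)

x = [0.6, 0.4]
x_adv = fgsm_on_substitute(x, oracle(x), w_sub, b_sub, eps=0.2)
print(oracle(x), oracle(x_adv))  # 0 1
```

The attack never reads the victim's parameters or gradients; it relies on the perturbation found for the substitute transferring to the victim.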

new Tesla Hack

- https://spectrum.ieee.org/cars-that-think/transportation/self-driving/three-small-stickers-on-road-can-steer-tesla-autopilot-into-oncoming-lane

- https://boingboing.net/2019/03/31/mote-in-cars-eye.html

- Paper by the research team (Tencent Keen Security Lab): https://keenlab.tencent.com/en/whitepapers/Experimental_Security_Research_of_Tesla_Autopilot.pdf

on voice (ASR)

- https://www.theregister.co.uk/2016/07/11/siri_hacking_phones/

- https://www.fastcompany.com/90240975/alexa-can-be-hacked-by-chirping-birds

BLACK BOX / WHITE BOX ATTACKS

on written text (NLP)

paraphrasing attacks

- https://venturebeat.com/2019/04/01/text-based-ai-models-are-vulnerable-to-paraphrasing-attacks-researchers-find/

- https://bdtechtalks.com/2019/04/02/ai-nlp-paraphrasing-adversarial-attacks/

Anti Surveillance

- http://dismagazine.com/dystopia/evolved-lifestyles/8115/anti-surveillance-how-to-hide-from-machines/